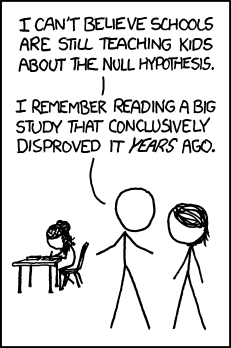

Inspired by a post on Andrew Gelman’s blog, The harm done by tests of significance:

The first four steps to understanding cause and effect:

- Formulate your signal hypotheses, $H_i$, where $i = 1, \ldots, n$.

- Fit your signal models to your data, $D$. Obtain model parameter values, $\hat{\theta}_i$, under each model.

- Reality-check your fit results. Does at least one of the fit models do a decent job of fitting the data? (If $P(D \mid \hat{\theta}_i, H_i)$ is crazy low for all of your signal hypotheses, then either you’ve got a highly anomalous observation on your hands or your signal hypotheses do not include the one that gave rise to the data.)

- Compute posterior probabilities:

  $$P(H_i \mid D) = \frac{P(D \mid H_i)\,P(H_i)}{\sum_{j=1}^{n} P(D \mid H_j)\,P(H_j)}$$
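Here is a minimal Python sketch of the four steps on a toy problem. Every specific is an assumption made for illustration, not the procedure from the post. The data (`XS`, `YS`, `SIGMA`) are made up. The three signal hypotheses are hypothetical one-parameter models of the form $y = \theta f_i(x)$ with known Gaussian noise. The parameter prior is uniform on `[THETA_LO, THETA_HI]`, and the hypotheses get equal prior probabilities $P(H_i)$. For the reality check, "crazy low" is quantified with a chi-square goodness-of-fit p-value (the Wilson-Hilferty approximation) and an arbitrary 0.01 threshold.

```python
import math
from statistics import NormalDist

# Toy data (assumed for illustration): y = 2x plus small fixed "noise",
# measured with a known Gaussian noise level SIGMA.
XS = [float(x) for x in range(1, 11)]
NOISE = [0.3, -0.2, 0.1, -0.4, 0.2, 0.0, -0.1, 0.3, -0.3, 0.1]
YS = [2.0 * x + e for x, e in zip(XS, NOISE)]
SIGMA = 0.25

# Step 1: hypothetical signal hypotheses H_i, each of the form y = theta * f_i(x).
HYPOTHESES = {
    "constant": lambda x: 1.0,
    "linear": lambda x: x,
    "quadratic": lambda x: x * x,
}
PRIOR_H = {name: 1.0 / len(HYPOTHESES) for name in HYPOTHESES}  # P(H_i), equal
THETA_LO, THETA_HI = -20.0, 20.0  # assumed uniform prior range for theta


def fit(f):
    """Step 2: least-squares (= maximum-likelihood) fit of y = theta * f(x)."""
    fs = [f(x) for x in XS]
    sff = sum(v * v for v in fs)
    theta_hat = sum(v * y for v, y in zip(fs, YS)) / sff
    chi2 = sum((y - theta_hat * v) ** 2 for v, y in zip(fs, YS)) / SIGMA**2
    return theta_hat, chi2, sff


def fit_p_value(chi2, dof):
    """Step 3: approximate P(chi^2 >= observed) via Wilson-Hilferty."""
    mean = 1.0 - 2.0 / (9.0 * dof)
    sd = math.sqrt(2.0 / (9.0 * dof))
    z = ((chi2 / dof) ** (1.0 / 3.0) - mean) / sd
    return 1.0 - NormalDist().cdf(z)


def log_evidence(theta_hat, chi2, sff):
    """log P(D | H_i): Gaussian likelihood integrated over the uniform theta prior."""
    n = len(XS)
    log_l_max = -0.5 * chi2 - n * math.log(SIGMA * math.sqrt(2.0 * math.pi))
    s = SIGMA / math.sqrt(sff)  # width of the likelihood as a function of theta
    a = (THETA_LO - theta_hat) / (s * math.sqrt(2.0))
    b = (THETA_HI - theta_hat) / (s * math.sqrt(2.0))
    mass = math.erf(b) - math.erf(a)
    if mass <= 0.0:  # likelihood peak far outside the prior range
        return -math.inf
    width = THETA_HI - THETA_LO
    return log_l_max + math.log(s * math.sqrt(math.pi / 2.0) * mass / width)


def analyze(threshold=0.01):
    results = {}
    for name, f in HYPOTHESES.items():
        theta_hat, chi2, sff = fit(f)
        p = fit_p_value(chi2, dof=len(XS) - 1)  # one fitted parameter
        results[name] = {"theta_hat": theta_hat, "p_fit": p,
                         "log_z": log_evidence(theta_hat, chi2, sff)}
    # Step 3: does at least one hypothesis fit acceptably?
    passed = any(r["p_fit"] > threshold for r in results.values())
    # Step 4: Bayes' theorem, normalized with log-sum-exp to avoid underflow.
    logs = {k: r["log_z"] + math.log(PRIOR_H[k]) for k, r in results.items()}
    m = max(logs.values())
    norm = sum(math.exp(v - m) for v in logs.values())
    for k in results:
        results[k]["posterior"] = math.exp(logs[k] - m) / norm
    return passed, results


if __name__ == "__main__":
    passed, results = analyze()
    print("reality check passed:", passed)
    for name, r in results.items():
        print(f"{name:9s} theta={r['theta_hat']:.3f} "
              f"p_fit={r['p_fit']:.3g} P(H|D)={r['posterior']:.3g}")
```

Step 4 uses the evidence $P(D \mid H_i)$, which is the likelihood averaged over the parameter prior, rather than the likelihood at the best-fit $\hat{\theta}_i$. Plugging in the best fit would drop the built-in penalty for more flexible models. For models that are linear in $\theta$ with Gaussian noise, that average has the closed form used in `log_evidence`.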

Get through those steps and you may have a story to tell.